Create Spark RDD Using Parallelize Method – Step-by-Step Guide

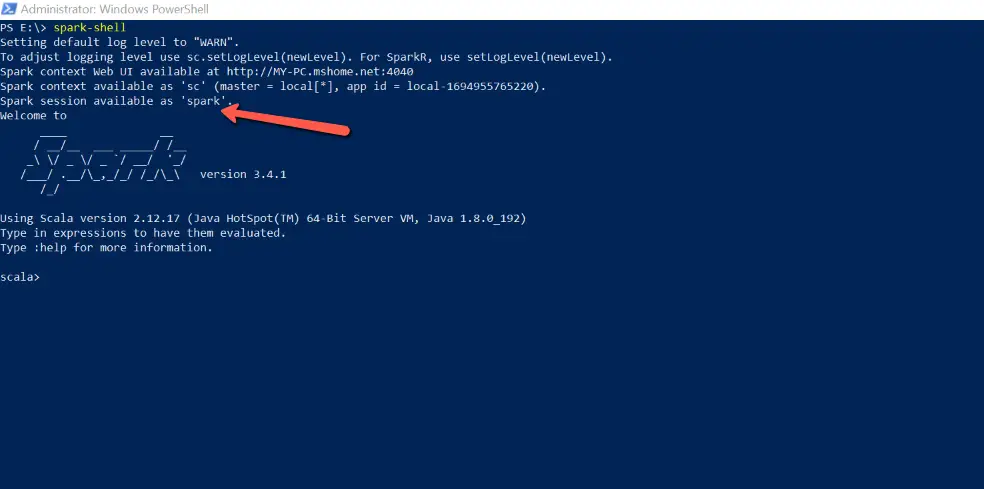

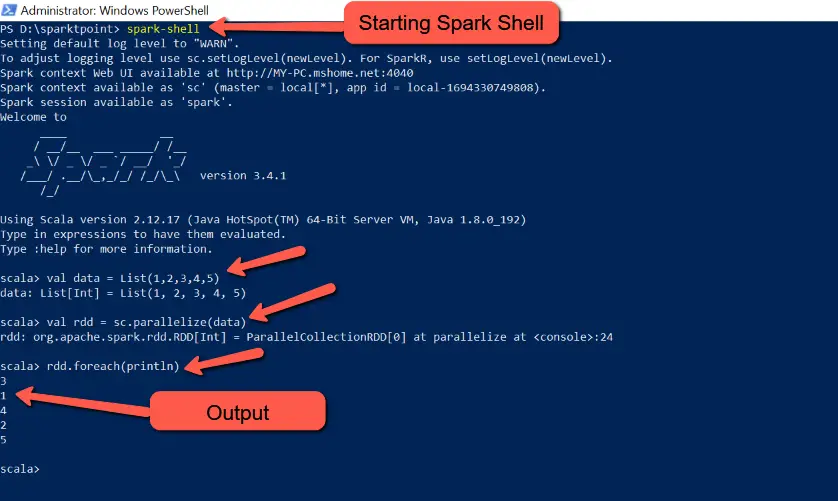

In Apache Spark, you can create an RDD (Resilient Distributed Dataset) with the `parallelize` method of `SparkContext`. This method converts a local collection, such as a Python list or a Scala `Seq`, into an RDD whose elements are split across partitions. The RDD is a fundamental data structure in Apache Spark, designed to handle and process large datasets in a distributed and fault-tolerant …
