Spark’s array_contains Function Explained

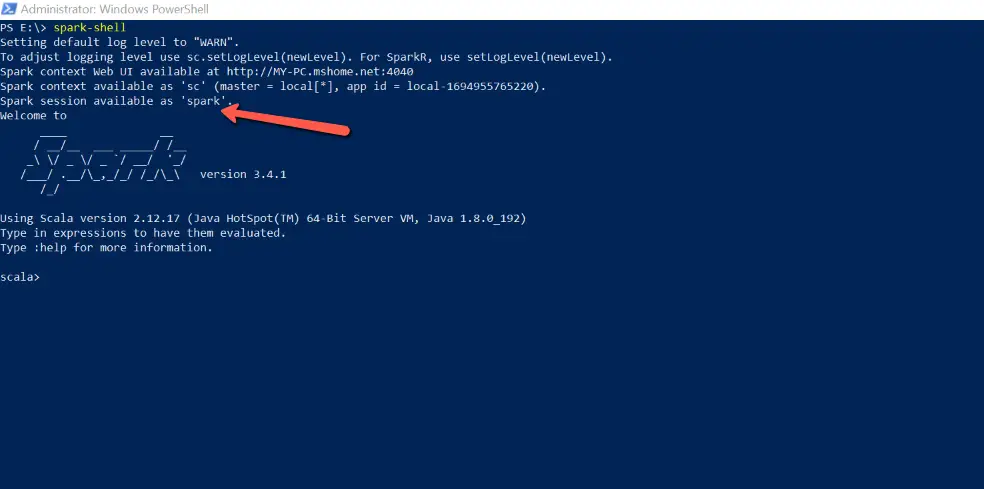

Apache Spark is a unified analytics engine for large-scale data processing that handles diverse workloads such as batch processing, streaming, interactive queries, and machine learning. Central to Spark is its core API, which lets you create and manipulate distributed datasets in the form of RDDs (Resilient Distributed Datasets) and DataFrames. As part of the Spark …