SparkContext has been a fundamental component of Apache Spark since its earliest versions. It was already part of the API in the early 0.x releases, well before Spark 1.0.

Apache Spark was initially developed in 2009 at the UC Berkeley AMPLab and open-sourced in 2010. The concept of SparkContext as the entry point for Spark applications has been a fundamental part of Spark’s architecture from its inception, and it has remained a key component throughout Spark’s evolution.

In short, SparkContext is not tied to a specific version introduction; it has been present since the beginning of Apache Spark.

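SparkContext was the primary entry point for Spark functionality in Spark 1.x. Starting with Spark 2.0, SparkSession became the recommended entry point. SparkSession wraps a SparkContext rather than removing it, so a SparkContext still runs underneath every SparkSession.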

What is SparkContext

SparkContext is the entry point to a Spark cluster. It represents the connection to the cluster and is used to configure and coordinate the execution of Spark jobs. It is typically created once per Spark application and is the starting point for interacting with Spark.

You can have only one active SparkContext in a single JVM. Attempting to create a second one while the first is still active results in an error. Either stop the existing context first, or call SparkContext.getOrCreate to reuse it.

Here’s an example of how to create a SparkContext and use it in a simple Scala Spark application:

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Named SparkContextExample so it does not clash with the imported SparkContext class
object SparkContextExample {

  def main(args: Array[String]): Unit = {
    // Create a SparkConf object to configure the Spark application
    val conf = new SparkConf().setAppName("MySparkApp").setMaster("local")

    // Create a SparkContext
    val sc = new SparkContext(conf)

    // Parallelize a list and perform a simple transformation
    val data = List(1, 2, 3, 4, 5)
    val rdd = sc.parallelize(data)
    val squaredRdd = rdd.map(x => x * x)

    println(squaredRdd.collect().mkString(", ")) // Collect the results and print them

    // Stop the SparkContext when you are done
    sc.stop()
  }
}
```

In this code:

- We import the `SparkConf` and `SparkContext` classes from the `org.apache.spark` package.
- We create a `SparkConf` object called `conf` and set two properties on it. `"MySparkApp"` is the name of our Spark application and is passed to the `setAppName` method. `"local"` tells Spark to run in local mode with a single worker thread, which is suitable for development and testing on a single machine; it is passed to the `setMaster` method.
- We create a `SparkContext` from `conf`.
- We demonstrate one simple example of using Spark: we parallelize a list of numbers into an RDD, map a function to square each number, and then collect the results.

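Finally, we stop the SparkContext when we’re done using it. In a real Spark application, you would typically package your code and launch it on a cluster with spark-submit. Your program still creates the SparkContext itself; the cluster manager (e.g., YARN or Kubernetes) provides the resources its executors run on. For that reason, production code usually omits setMaster and supplies the master URL through spark-submit's --master option, because a value set directly on SparkConf takes precedence over spark-submit flags.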

SparkContext in SparkSession

SparkSession was introduced in Spark 2.0. It encapsulates and manages the SparkContext internally: when you create a SparkSession, it automatically creates and configures a SparkContext for you, so you don’t need to create a separate SparkContext instance.

Here’s how it works:

- When you create a `SparkSession`, it internally configures and creates a `SparkContext` as part of its initialization, or reuses one that is already active.
- `SparkSession` provides a higher-level API for working with structured data using DataFrames and Spark SQL. It abstracts away many of the low-level details of managing Spark configurations and resources, making it easier to work with structured data.
- You can access the underlying `SparkContext` from the `SparkSession` if needed, but in most cases you won’t need to interact directly with the `SparkContext` when using a `SparkSession`.

Here’s an example in Scala:

import org.apache.spark.sql.SparkSession

object SparkSessionApp {

def main(args: Array[String]) {

// Create a SparkSession

val spark = SparkSession.builder()

.master("local")

.appName("SparkTPoint.com")

.getOrCreate()

// You can access the SparkContext via the SparkSession

val sc = spark.sparkContext

// Now you can use 'spark' for DataFrame and SQL operations

// And you can use 'sc' for lower-level Spark operations if necessary

println(sc)

}

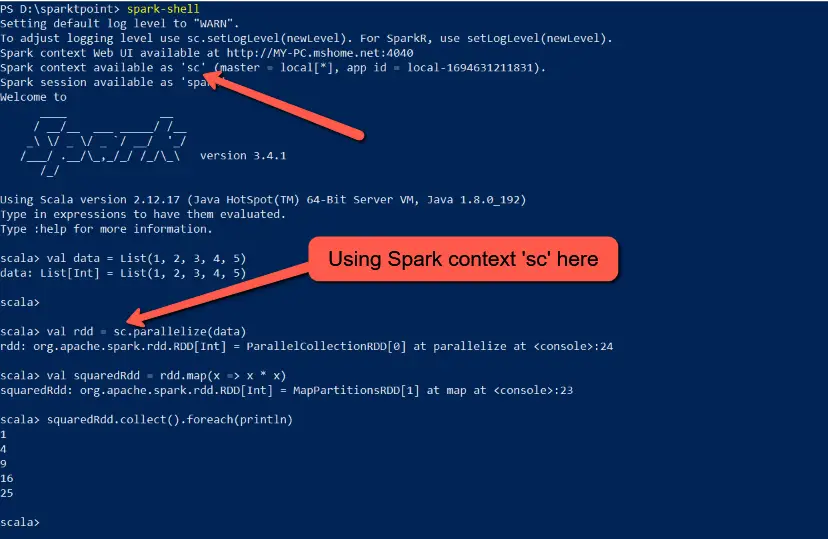

}SparkContext in spark-shell

When you use the spark-shell, you don’t need to create a SparkContext explicitly, because it is created for you automatically. The spark-shell is an interactive Scala shell (pyspark is its Python counterpart) that gives you a ready-made Spark environment for running Spark code without having to manage the SparkContext yourself.

When you launch spark-shell, a SparkContext is created under the variable name sc and is ready to use immediately. Since Spark 2.0, the shell also creates a SparkSession named spark, which you can use to run Spark SQL queries directly without any manual setup.

Here’s an example of how you would use the spark-shell:

- Open your terminal.

- Run `spark-shell`.

- Once `spark-shell` has started, you will see the Spark logo and version information, and you can start using the Spark context, which is available as ‘sc’.

In Python (PySpark), the process is similar:

- Open your terminal.

- Run `pyspark`.

- Once the `pyspark` session has started, you will have access to the `SparkContext` via the `sc` variable, and you can use it to run Spark operations.

```python
# `sc` is predefined inside the pyspark shell
# Create an RDD and perform operations on it
data = [1, 2, 3, 4, 5]
rdd = sc.parallelize(data)
squared_rdd = rdd.map(lambda x: x**2)
print(squared_rdd.collect())
```

The spark-shell and pyspark interactive shells make it convenient to experiment with Spark code and perform data analysis without the need to explicitly create and manage the SparkContext.

Key Points:

- Legacy Code: If you’re working with older Spark code, especially code written for Spark 1.x, you’ll likely encounter SparkContext.

- Specific Use Cases: SparkContext is still used for working with RDDs directly and for lower-level features such as broadcast variables and accumulators.

- New Development: For new Spark applications, it’s strongly recommended to use SparkSession.

Conclusion

In conclusion, SparkContext is a fundamental component in Apache Spark that serves as the entry point to a Spark cluster and is responsible for coordinating and managing Spark applications.

Overall, SparkContext plays a central role in Spark applications by providing the foundation for distributed data processing, job coordination, and resource management. Understanding how to create, configure, and use SparkContext is essential for effectively developing and managing Spark-based data processing applications.

Frequently Asked Questions (FAQs)

What is SparkContext in Apache Spark?

SparkContext is the entry point and a crucial component of an Apache Spark application. It represents the connection to a Spark cluster and is responsible for coordinating the execution of Spark jobs.

Do I need to create a SparkContext for every Spark application?

Typically, you create one SparkContext per Spark application and keep it for the application’s entire lifetime. If you use SparkSession, it creates the SparkContext for you.

How do I configure Spark properties using SparkContext?

You can set various Spark properties using the SparkConf object before creating the SparkContext. Properties such as the master URL, application name, and memory settings can be configured this way.

What happens when I call sc.stop()?

When you call sc.stop(), it stops the SparkContext and releases allocated resources. It’s important to stop the SparkContext when you are done with your Spark application to free up cluster resources.

What are some common use cases for SparkContext?

Common use cases for SparkContext include creating RDDs (Resilient Distributed Datasets), configuring Spark applications, managing Spark jobs, and controlling Spark resources.