SparkSession is the entry point for using Apache Spark’s functionality in your application. It is available since Spark 2.0 and serves as a unified way to interact with Spark, replacing the previous SparkContext, SQLContext, and HiveContext. In this article , we’ll explore the role of SparkSession, its importance, and why mastering it is essential for unlocking the full potential of Apache Spark.

Access SparkSession in spark-shell

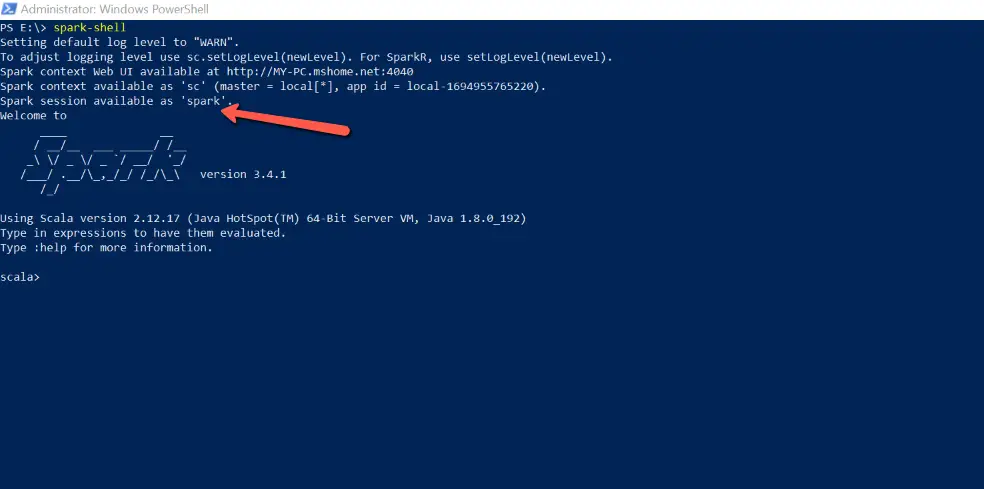

In Apache Spark, you can also use SparkSession within the spark-shell, which is an interactive shell for Spark. The spark-shell provides a convenient way to interactively explore and experiment with Spark features and APIs.

Here’s how you can use SparkSession in the spark-shell:

- Start the

spark-shell:Open a terminal and run thespark-shellcommand to launch the interactive Spark shell. - Access the

SparkSession: Once thespark-shellis started, an activeSparkSessionnamedsparkis automatically available for you to use. You can start using it without any additional setup.

SparkSession in Scala Code Example

import org.apache.spark.sql.SparkSession

object SparkSessionApp {

def main(args: Array[String]) {

// Create a SparkSession

val spark = SparkSession.builder()

.master("local")

.appName("SparkTPoint.com")

.config("spark.some.config.option", "config-value")

.getOrCreate()

}

}Importing SparkSession: To use SparkSession, you need to import it and create an instance: You can configure various Spark settings using .config() method. In this example, we set the application name and a custom configuration option.

Create New Spark Session

In Apache Spark, you can create a new SparkSession that is independent of the existing one by using the newSession() method. This method creates a new session with the same configuration as the original SparkSession but with a different underlying SessionState. This can be useful when you want to isolate different parts of your application and avoid interference between them. Here’s how you can use the newSession() method in Scala:

import org.apache.spark.sql.SparkSession

object SparkSessionApp {

def main(args: Array[String]) {

// Create a SparkSession

val spark = SparkSession.builder()

.master("local")

.appName("SparkTPoint.com")

.getOrCreate()

// Create a new session based on the original SparkSession

val newSpark = spark.newSession()

// Perform operations with the new session

println(spark)

println(newSpark)

// Don't forget to stop both sessions when you're done

spark.stop()

newSpark.stop()

}

}

// Output

//org.apache.spark.sql.SparkSession@33eb6758

//org.apache.spark.sql.SparkSession@f8a6243Reading Data using SparkSession

SparkSession simplifies reading data from various sources, like CSV, JSON, Parquet, and more:

val df = spark.read

.format("csv")

.option("header", "true")

.load("data.csv")Configuring Spark Properties

Configuring Spark’s runtime properties through SparkSession is essential for controlling various aspects of your Apache Spark application, such as memory allocation, parallelism, and logging levels. You can set these properties when creating a SparkSession or modify them later during the execution of your Spark application. Below are examples of how to configure some common Spark runtime properties using SparkSession.

In Apache Spark, you can retrieve all the configuration settings of a SparkSession using the conf property, which returns a SparkConf object. You can then use the getAll method of the SparkConf object to obtain all the settings as key-value pairs. You can set various Spark configuration properties using the config() method. Here’s an example in Scala:

import org.apache.spark.sql.SparkSession

object SparkSessionApp {

def main(args: Array[String]) {

// Create a SparkSession

val spark = SparkSession.builder()

.appName("SparkTPoint.com")

.master("local[2]") // Use a local cluster with 2 cores

.config("spark.executor.memory", "2g")

.config("spark.executor.cores", "2")

.config("spark.shuffle.compress", "true")

.getOrCreate()

// Get all Spark configuration settings

val allSettings = spark.conf.getAll

// Display the settings

allSettings.foreach { case (key, value) =>

println(s"$key: $value")

}

// Don't forget to stop the SparkSession when you're done

spark.stop()

}

}Running this code will display all the configuration settings that have been set for the SparkSession, along with their corresponding values. This can be helpful for inspecting the runtime configuration of your Spark application.

Managing Catalog Metadata with SparkSession

In Apache Spark, you can access catalog metadata using the SparkSession object to query and manipulate information about tables, databases, and their schemas. Spark provides a built-in catalog called the “Hive Catalog” that can be used to manage and query metadata.

To list all available databases in the catalog:

val databases = spark.catalog.listDatabases()

databases.show()Creating Dataframes

Creating a DataFrame using SparkSession in Apache Spark is quite straightforward. You can create a DataFrame from an existing RDD, from a data source like a CSV file, or by specifying the schema and providing data. Here’s how to create a DataFrame using SparkSession:

import org.apache.spark.sql.SparkSession

object SparkSessionApp {

def main(args: Array[String]) {

// Create a SparkSession

val spark = SparkSession.builder()

.master("local")

.appName("SparkTPoint.com")

.getOrCreate()

val data = Seq(("John", 28), ("Emily", 24), ("Michael", 32))

val df = spark.createDataFrame(data)

df.show()

}

}

/* Output

+-------+---+

| _1| _2|

+-------+---+

| John| 28|

| Emily| 24|

|Michael| 32|

+-------+---+

*/Running SQL Queries

You can run SQL queries on DataFrames by registering them as temporary tables. Now, you can run SQL queries on the registered tables using spark.sql():

import org.apache.spark.sql.SparkSession

object SparkSessionApp {

def main(args: Array[String]) {

// Create a SparkSession

val spark = SparkSession.builder()

.master("local")

.appName("SparkTPoint.com")

.getOrCreate()

val data = Seq(("Alice", 25), ("Bob", 32), ("Charlie", 35))

val schema = Seq("name", "age")

val df = spark.createDataFrame(data).toDF(schema: _*)

// Register the DataFrame as a temporary table

df.createOrReplaceTempView("people")

// Run SQL queries

val result = spark.sql("SELECT name, age FROM people WHERE age > 30")

result.show()

}

}

/* Output

+-------+---+

| name|age|

+-------+---+

| Bob| 32|

|Charlie| 35|

+-------+---+

*/Hive Support in SparkSession

In Apache Spark, you can enable Hive support in a SparkSession by using the enableHiveSupport() method. Enabling Hive support allows you to use Hive’s metastore, HiveQL (Hive Query Language), and access Hive-managed tables and data sources within your Spark application. Here’s how to enable Hive support in a SparkSession:

val spark = SparkSession.builder()

.appName("EnableHiveSupportExample")

.enableHiveSupport() // Enable Hive support

.config("spark.sql.warehouse.dir", "/path/to/your/custom/warehouse/directory")

.getOrCreate()

// Run a HiveQL query

val result = spark.sql("SELECT * FROM your_hive_database.your_hive_table")

result.show()Saving and Reading from Hive table with SparkSession

You can save and read data from a Hive table using SparkSession in Apache Spark. Here’s how you can do it:

import org.apache.spark.sql.SparkSession

object SparkSessionApp {

def main(args: Array[String]) {

// Create a SparkSession

val spark = SparkSession.builder()

.master("local")

.appName("SparkTPoint.com")

.enableHiveSupport()

.getOrCreate()

// Create a DataFrame with some sample data

val data = Seq(("Alice", 25), ("Bob", 30), ("Charlie", 35))

val schema = Seq("name", "age")

val df = spark.createDataFrame(data).toDF(schema: _*)

// Save the DataFrame to a Hive table

val tableName = "employee"

df.write.mode("overwrite").saveAsTable(tableName)

// Read data from the Hive table into a DataFrame

val df1 = spark.table(tableName)

// Show the DataFrame

df1.show()

}

}

/* Output

+-------+---+

| name|age|

+-------+---+

| Alice| 25|

| Bob| 30|

|Charlie| 35|

+-------+---+

*/Essential Methods and Properties of SparkSession

The SparkSession class in Apache Spark provides various commonly used methods and properties for configuring and interacting with Spark. Here are some of the most commonly used methods and properties of SparkSession:

Creating a SparkSession:

SparkSession.builder(): Start building a newSparkSession.appName(name: String): Set the name of the Spark application.master(master: String): Set the Spark master URL (e.g., “local”, “yarn”, “spark://host:port”).config(key: String, value: String): Set Spark configuration options.enableHiveSupport(): Enable Hive support for Spark SQL.getOrCreate(): Get an existingSparkSessionor create a new one.

Data Reading and Writing:

read: Access theDataFrameReaderfor reading data from various sources (e.g., CSV, Parquet, JSON).write: Access theDataFrameWriterfor writing data to various formats (e.g., Parquet, JSON, JDBC).

Working with DataFrames and Datasets:

createDataFrame(data: Seq[_], schema: StructType): Create a DataFrame from a sequence of data and a schema.range(start: Long, end: Long, step: Long): Create a DataFrame with a range of values.sql(query: String): Execute a SQL query and return the result as a DataFrame.table(tableName: String): Retrieve a DataFrame representing a table registered in the catalog.createOrReplaceTempView(viewName: String): Register a DataFrame as a temporary SQL table.

Catalog and Hive Interaction:

catalog: Access theCataloginterface for managing databases, tables, and functions.

Stopping SparkSession:

stop(): Stop theSparkSessionand release resources.

These are some of the most commonly used methods and properties of SparkSession. Depending on your use case, you may use additional methods and configurations to customize your Spark application. SparkSession is a versatile tool for configuring and interacting with Spark, making it easier to work with structured and semi-structured data.

Conclusion

In conclusion, Apache SparkSession is a crucial component in Apache Spark that serves as the entry point and central configuration hub for Spark applications. It provides a unified interface for interacting with various Spark functionalities, including SQL processing, DataFrame and Dataset operations, and interactions with Hive.

Frequently Asked Questions (FAQs)

How do I create a SparkSession?

You can create a SparkSession using SparkSession.builder(). It allows you to set various configuration options and then call getOrCreate() to create or obtain an existing SparkSession.

How do I configure Spark properties using Spark Session?

You can configure Spark properties using the .config(key, value) method of SparkSession. This allows you to set various runtime properties like memory allocation, parallelism, and more.

Can I run SQL queries using SparkSession?

Yes, you can run SQL queries using SparkSession by using the .sql(query) method. You can execute SQL queries on DataFrames, temporary views, and registered tables.

What is the difference between DataFrames and Datasets in Apache Spark Session?

DataFrames and Datasets are both structured APIs in Spark. DataFrames are distributed collections of data organized into named columns, while Datasets are a strongly typed extension of DataFrames. Datasets allow you to work with data in a type-safe manner.

How can I enable dynamic resource allocation in Spark Session?

You can enable dynamic resource allocation by setting Spark configuration properties such as "spark.dynamicAllocation.enabled", "spark.dynamicAllocation.minExecutors", and "spark.dynamicAllocation.maxExecutors" using .config().

What is the recommended way to stop a SparkSession?

You should always stop a Spark Session when you’re done using it to release resources. Use the .stop() method to gracefully shut down the SparkSession.